Let’s be honest—most companies dive into AI with stars in their eyes and a vague plan in their back pocket. They’re excited about the possibilities, inspired by success stories, and eager to transform their business. But here’s the reality check: without the right infrastructure foundation, your AI strategy won’t just struggle—it’ll likely crash and burn.

Think of infrastructure as the plumbing in your house. Nobody gets excited about pipes and water pressure, but try running a bath when your plumbing can’t handle it. That’s exactly what happens when companies try to scale AI without proper infrastructure planning.

In this article, we’re walking through five critical areas of infrastructure decisions you need to settle before you scale your AI efforts. These aren’t theoretical concepts. They’re practical considerations that separate successful AI implementations from expensive failures. Let’s dive in.

Building a Robust Data Architecture Foundation

Your data architecture is where everything begins. You can have the smartest AI models and the most talented data scientists, but if your data infrastructure is a mess, you’re building on quicksand.

Assessing Your Current Data Infrastructure

Start by taking an honest look at what you already have. Where does your data live right now? Is it scattered across different departments, locked in outdated systems, or stored in formats that don’t play nice with modern AI tools?

Most companies discover they have more data silos than they realized. Your sales team has customer information in their CRM, your operations team tracks everything in spreadsheets, and your finance department guards their data like treasure. This fragmentation kills AI projects before they even start.

You need to evaluate whether your current storage systems can actually scale. Can they handle the volume of data you’ll be processing in two years? What about five years? AI applications are data-hungry beasts, and they only get hungrier as they grow.
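One rough way to approach the two-year and five-year questions is to project compound growth from today’s volume. The sketch below assumes a constant monthly growth rate, which real workloads rarely follow exactly, and the 50 TB and 4% figures are made-up inputs for illustration.

```python
def projected_storage_tb(current_tb: float, monthly_growth: float, months: int) -> float:
    """Project storage volume assuming a constant compound monthly growth rate."""
    if current_tb < 0 or months < 0:
        raise ValueError("current_tb and months must be non-negative")
    return current_tb * (1 + monthly_growth) ** months


# Hypothetical inputs: 50 TB today, growing 4% per month.
for years in (2, 5):
    tb = projected_storage_tb(50.0, 0.04, years * 12)
    print(f"{years} years: about {tb:,.0f} TB")
```

Swap in your own measured growth rate. Even a modest monthly percentage compounds into a very different number at the five-year mark.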

Don’t forget about data quality. Garbage in, garbage out isn’t just a catchy phrase—it’s a painful reality. Check your data for consistency, accuracy, and completeness. If 30% of your customer records are missing key information, no amount of AI magic will fix that.
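A completeness check like the one described above can start very small. This is a minimal sketch that treats `None` and empty strings as missing; the field names and sample records are hypothetical, and a real audit would also cover consistency and accuracy.

```python
from typing import Iterable, Mapping, Sequence


def missing_rate(records: Iterable[Mapping], required_fields: Sequence[str]) -> dict[str, float]:
    """Return, per required field, the share of records where it is absent or empty."""
    rows = list(records)
    if not rows:
        return {name: 0.0 for name in required_fields}
    rates = {}
    for name in required_fields:
        missing = sum(1 for row in rows if row.get(name) in (None, ""))
        rates[name] = missing / len(rows)
    return rates


# Hypothetical customer records for illustration.
customers = [
    {"email": "a@example.com", "phone": ""},
    {"email": None, "phone": "555-0100"},
    {"email": "c@example.com", "phone": "555-0101"},
    {"email": "d@example.com"},
]
for name, rate in missing_rate(customers, ["email", "phone"]).items():
    print(f"{name}: {rate:.0%} missing")
```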

Choosing the Right Data Storage Solutions

Now comes the big question: where should all this data actually live? Cloud storage offers incredible flexibility and scalability. You can spin up resources when you need them and scale down when you don’t. But it also means your data is sitting on someone else’s servers, which raises security and compliance questions.

On-premises storage gives you complete control, but it also means you’re responsible for maintenance, upgrades, and capacity planning. Many companies find that a hybrid approach works best—keeping sensitive data on-premises while leveraging cloud storage for everything else.

You’ll also need to decide between data lakes and data warehouses. Data lakes store massive amounts of raw data in its native format, whether structured, semi-structured, or unstructured. Data warehouses hold cleaned, structured data that has been modeled for specific analytics tasks. Most AI strategies need both. This is also where data lake consulting services become valuable, helping organizations design, optimize, and manage scalable storage environments that support long-term AI growth.

Data Governance and Organization

Here’s where things get serious. Who owns what data? Who can access it? How do you track changes? Without clear governance, your data architecture becomes chaotic.

Set up metadata management systems that help people find the data they need. Create clear protocols for data access—not everyone needs to see everything. Implement version control so you can track how data changes over time and reproduce results when needed.
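To make those three ideas concrete, here is a toy catalog that records ownership, role-based read access, and a change history per dataset. It is a sketch, not a substitute for a real metadata platform; the class, function, dataset names, and roles are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """One catalog record: what the data is, who owns it, who may read it."""
    name: str
    owner: str
    description: str
    allowed_roles: set[str]
    version: int = 1
    history: list[str] = field(default_factory=list)

    def can_read(self, role: str) -> bool:
        return role in self.allowed_roles

    def record_change(self, note: str) -> None:
        # Bump the version and keep a note so results can be traced later.
        self.version += 1
        self.history.append(f"v{self.version}: {note}")


def search(catalog: list[DatasetEntry], keyword: str) -> list[str]:
    """Return names of datasets whose name or description mentions the keyword."""
    kw = keyword.lower()
    return [e.name for e in catalog if kw in e.name.lower() or kw in e.description.lower()]


# Hypothetical entries for illustration only.
catalog = [
    DatasetEntry("crm_customers", "sales", "Customer contact records", {"sales", "data_science"}),
    DatasetEntry("ledger", "finance", "General ledger transactions", {"finance"}),
]
print(search(catalog, "customer"))
print(catalog[1].can_read("data_science"))
```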

Think of data governance as creating a library system. Without it, you have a room full of books with no way to find what you need.

Network Infrastructure and Connectivity Requirements

AI doesn’t work in isolation. It needs to move massive amounts of data around, and that requires serious network muscle.

Evaluating Bandwidth and Latency Needs

Training AI models means transferring gigabytes or even terabytes of data. Running real-time inference means getting responses back in milliseconds. Your network needs to handle both scenarios without breaking a sweat.

Calculate your bandwidth requirements honestly. If you’re training models on large datasets, you need high-speed connections between your data storage and compute resources. If you have distributed teams working on AI projects, they need reliable access to your systems.
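A back-of-the-envelope transfer-time calculation is a reasonable first step. The sketch below converts gigabytes to gigabits and divides by the usable link rate. The 0.7 efficiency factor is an assumption standing in for protocol overhead and contention, so measure your own links before relying on it.

```python
def transfer_hours(dataset_gb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Hours to move dataset_gb gigabytes over a link rated at link_gbps gigabits per second."""
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency must be in (0, 1]")
    gigabits = dataset_gb * 8  # 1 byte = 8 bits
    seconds = gigabits / (link_gbps * efficiency)
    return seconds / 3600


# Hypothetical scenario: a 10 TB (10,000 GB) training set over two link speeds.
for gbps in (1, 10):
    print(f"{gbps} Gbps link: {transfer_hours(10_000, gbps):.1f} hours")
```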

Latency matters more than most people realize. For some AI applications—like fraud detection or autonomous systems—every millisecond counts. Assess your latency sensitivity and plan accordingly.

Enterprise Connectivity Solutions

This is where reliable telecommunications infrastructure becomes critical. You can’t build a robust AI strategy on shaky network foundations.

Enterprise-grade connectivity isn’t just about speed. It’s about reliability and consistency. When you’re running models that cost thousands of dollars per training run, you can’t afford network interruptions. For organizations handling data-intensive AI operations, solutions like M1’s enterprise connectivity services provide the stable, high-speed connections that keep your AI workflows running smoothly, especially when you’re coordinating between distributed teams or managing cloud-based infrastructure.

You also need redundancy. What happens if your primary connection goes down? Having backup connectivity options isn’t paranoid—it’s essential for business continuity.

Don’t overlook security either. Moving sensitive data across networks means encrypting everything and implementing strict access controls. Your network should have multiple layers of security, not just a firewall at the edge.

Edge Computing Considerations

Sometimes the best place to run AI isn’t in the cloud—it’s right where the data is generated. Edge computing processes data locally, which dramatically reduces latency and bandwidth requirements.

Think about retail stores using AI for inventory management or factories using computer vision for quality control. These applications need immediate responses, not the delay of sending data to a distant cloud server and waiting for results.

Deciding what runs at the edge and what runs centrally is a strategic choice. You need connectivity between your edge devices and central infrastructure, but you’re not trying to send everything across the network all the time.
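One simple way to frame the edge-versus-central choice is as a latency budget: network round trip plus inference time has to fit inside the response deadline. The sketch below prefers central placement whenever it fits, which is an assumption about cost and manageability rather than a rule, and every number in it is hypothetical.

```python
def fits_latency_budget(budget_ms: float, round_trip_ms: float, inference_ms: float) -> bool:
    """True if network round trip plus inference time stays within the budget."""
    return round_trip_ms + inference_ms <= budget_ms


def choose_placement(budget_ms: float, cloud_rtt_ms: float,
                     cloud_inference_ms: float, edge_inference_ms: float) -> str:
    # Assumption: central is preferred when it meets the deadline.
    if fits_latency_budget(budget_ms, cloud_rtt_ms, cloud_inference_ms):
        return "central"
    # Edge inference is treated as having no network round trip.
    if fits_latency_budget(budget_ms, 0.0, edge_inference_ms):
        return "edge"
    return "neither"


# Hypothetical quality-control camera with a 50 ms deadline.
print(choose_placement(budget_ms=50, cloud_rtt_ms=80, cloud_inference_ms=10, edge_inference_ms=30))
```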

Compute Resources and Cloud Strategy

Now let’s talk about the engines that power your AI—your compute resources.

Determining Processing Power Requirements

AI models, especially deep learning models, need serious processing power. The question is: what kind and how much?

GPUs (Graphics Processing Units) excel at the parallel processing that AI models love. They’re the workhorses of most AI training operations. CPUs still matter for many AI tasks, particularly inference on smaller models. TPUs (Tensor Processing Units) are specialized chips designed specifically for AI workloads and offer incredible performance for certain applications.

Don’t just guess at your compute needs. Look at the models you plan to run, estimate training times, and calculate costs. A model that takes three days to train on CPUs might finish in three hours on the right GPU setup.
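The three-days-versus-three-hours comparison is worth turning into dollars, because faster hardware usually costs more per hour. This sketch multiplies run time by an hourly rate. The $2 and $30 rates are invented for illustration and are not quotes from any provider.

```python
def training_cost(hours: float, hourly_rate: float, num_instances: int = 1) -> float:
    """Total cost of one training run: duration x rate x instance count."""
    if hours < 0 or hourly_rate < 0 or num_instances < 1:
        raise ValueError("invalid inputs")
    return hours * hourly_rate * num_instances


# Hypothetical rates: CPU instance at $2/hour, GPU instance at $30/hour.
cpu_cost = training_cost(hours=72, hourly_rate=2.0)   # three days on CPUs
gpu_cost = training_cost(hours=3, hourly_rate=30.0)   # three hours on a GPU
print(f"CPU run: ${cpu_cost:.2f}, GPU run: ${gpu_cost:.2f}")
```

Under these assumed rates the GPU run is both faster and cheaper, but the comparison can flip with different prices, so plug in real quotes.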

Cloud Infrastructure Selection

Should you go all-in on one cloud provider, spread across multiple clouds, or keep some compute on-premises? There’s no universal right answer, but there are smart ways to think about it.

Public clouds offer incredible flexibility and access to the latest AI tools. Private clouds give you more control and potentially better economics at scale. Hybrid approaches let you keep sensitive workloads on-premises while leveraging cloud resources for everything else.

Multi-cloud strategies help you avoid vendor lock-in and let you use the best tools from different providers. But they also add complexity—you need to manage multiple platforms and potentially move data between them.

Containerization platforms like Docker and orchestration tools like Kubernetes make it easier to move AI workloads between different environments. If you’re serious about AI at scale, these technologies aren’t optional.

Cost Optimization Strategies

AI compute costs can spiral quickly if you’re not careful. A single large model training run can cost thousands of dollars. Scale that up to dozens or hundreds of experiments, and you’re looking at serious money.

Use reserved instances or committed use discounts for predictable workloads. Spot instances work great for training runs that can handle occasional interruptions—you get deep discounts in exchange for flexibility.
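Whether spot capacity actually saves money depends on how much work gets redone after interruptions. The sketch below models that as a single rework fraction, which is a simplification. The hourly rates and the 15% rework figure are hypothetical.

```python
def on_demand_cost(base_hours: float, hourly_rate: float) -> float:
    """Cost of an uninterrupted run on on-demand capacity."""
    return base_hours * hourly_rate


def spot_cost(base_hours: float, spot_rate: float, rework_fraction: float) -> float:
    """Cost on spot capacity, where rework_fraction is extra compute redone after interruptions."""
    if rework_fraction < 0:
        raise ValueError("rework_fraction must be non-negative")
    return base_hours * (1 + rework_fraction) * spot_rate


# Hypothetical 100-hour run: $10/hour on demand, $3/hour spot, 15% rework.
print(f"on demand: ${on_demand_cost(100, 10.0):.2f}")
print(f"spot:      ${spot_cost(100, 3.0, 0.15):.2f}")
```

Checkpointing frequently keeps the rework fraction low, which is what makes interruptible capacity practical for training.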

Monitor everything. You’d be surprised how often companies leave expensive resources running 24/7 when they only need them for a few hours a day. Set up cost alerts and regularly review your spending.

Right-sizing is crucial. Don’t provision massive instances when smaller ones will do. And definitely don’t leave resources idle—shut them down when you’re not using them.
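Spotting idle resources can be as simple as scanning utilization samples. This sketch flags anything that sits below a utilization threshold for most of its samples. The 5% and 90% cutoffs and the resource names are assumptions, and in practice the samples would come from your provider’s monitoring export.

```python
def find_idle(utilization: dict[str, list[float]],
              threshold: float = 0.05,
              min_idle_share: float = 0.9) -> list[str]:
    """Return resources whose utilization is below threshold in at least min_idle_share of samples."""
    idle = []
    for name, samples in utilization.items():
        if not samples:
            continue  # no data, so make no claim about this resource
        idle_share = sum(1 for u in samples if u < threshold) / len(samples)
        if idle_share >= min_idle_share:
            idle.append(name)
    return sorted(idle)


# Hypothetical hourly GPU utilization samples (0.0 to 1.0).
samples = {
    "gpu-train-01": [0.92, 0.88, 0.95, 0.90],
    "gpu-dev-02": [0.01, 0.00, 0.02, 0.00],
    "gpu-dev-03": [0.00, 0.60, 0.00, 0.00],
}
print(find_idle(samples))
```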

Talent and Expertise Gaps

Here’s an uncomfortable truth: technology is the easier part of AI infrastructure. Finding and keeping the right talent is much harder.

Internal Team Assessment

Be brutally honest about your team’s capabilities. Do you have people who understand modern data science practices? Can your engineers handle MLOps—the operational side of machine learning? Does anyone on your team actually know how to deploy and monitor AI models in production?

Most companies discover significant gaps. That’s normal. What matters is acknowledging them and making a plan.

Traditional IT skills don’t automatically translate to AI infrastructure management. Your excellent database administrators might not know anything about model serving platforms. Your software developers might be unfamiliar with machine learning workflows.

Strategic Partnership Approaches

When you lack internal expertise, trying to figure everything out yourself is expensive and slow. This is where getting outside help makes sense. Working with specialized AI consulting firms can accelerate your strategy development and help you avoid costly mistakes that others have already made.

Good consultants don’t just build things for you—they transfer knowledge to your team. Look for partnerships that include training and capability building, not just project delivery.

External expertise is particularly valuable for validating your strategy. When you’re investing millions in AI infrastructure, having experienced professionals review your plans can save you from fundamental mistakes.

The goal isn’t to depend on consultants forever—it’s to build your own capabilities while getting expert guidance through the critical early stages.

Building Long-Term Capabilities

External help is a bridge, not a permanent solution. You need to build lasting AI capabilities within your organization.

Create clear career paths for data scientists, ML engineers, and AI architects. These professionals are in high demand, and they won’t stick around if they don’t see growth opportunities.

Establish centers of excellence where AI knowledge concentrates and spreads throughout the organization. These groups become the go-to resources for AI questions and best practices.

Foster a culture of continuous learning. AI technologies evolve rapidly. What’s cutting-edge today might be outdated in two years. Your team needs time and resources to stay current.

Balance is key. You want to own your AI capabilities, but you don’t need to do everything in-house. Strategic vendor relationships and selective outsourcing let you focus on what matters most to your business.

Operational Readiness and Compliance Frameworks

You can have perfect technical infrastructure and brilliant people, but if you’re not operationally ready, you’ll still fail.

Regulatory and Compliance Considerations

AI operates in a complex regulatory environment, and it’s only getting more complex. Data privacy laws like GDPR and CCPA directly affect how you collect, store, and use data for AI.

Industry-specific regulations add another layer. In the United States, healthcare AI that handles patient data must comply with HIPAA. Financial services AI needs to meet strict regulatory requirements. Manufacturing AI might need to satisfy safety certifications.

Don’t treat compliance as an afterthought. Build it into your infrastructure from the start. That means implementing proper data handling procedures, maintaining audit trails, and documenting your AI decision-making processes.

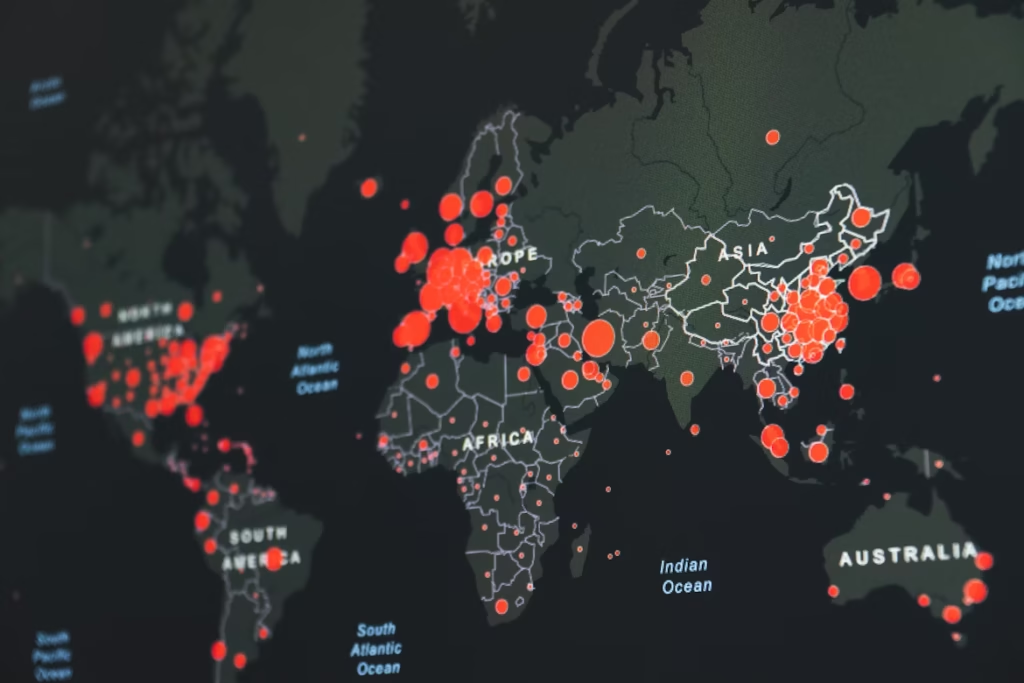

Cross-border data transfers deserve special attention. Different countries have different rules about where data can be stored and processed. If your AI strategy involves international operations, you need to understand these restrictions.

International Operations and Data Movement

Global AI initiatives face unique infrastructure challenges. You’re not just moving data—you’re coordinating across time zones, regulatory jurisdictions, and sometimes different technology stacks.

Data residency requirements might force you to keep certain data within specific countries. This affects where you can train models and where you can deploy them.
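A residency policy can be encoded as a lookup that your pipelines check before moving or training on data. The sketch below is illustrative only: the dataset names, region labels, and rules are invented, and unknown datasets are denied by default as a cautious assumption. Actual rules need to come from legal review.

```python
# Hypothetical policy table: dataset name -> regions where it may be stored or processed.
RESIDENCY_RULES: dict[str, set[str]] = {
    "eu_customer_records": {"eu-west", "eu-central"},
    "us_claims": {"us-east"},
    "public_product_catalog": {"eu-west", "us-east", "ap-southeast"},
}


def can_process(dataset: str, region: str) -> bool:
    """True only if the dataset has a rule and the region is on its allow list."""
    allowed = RESIDENCY_RULES.get(dataset)
    return allowed is not None and region in allowed


def valid_training_regions(datasets: list[str]) -> set[str]:
    """Regions where every listed dataset may be processed together."""
    region_sets = [RESIDENCY_RULES.get(d, set()) for d in datasets]
    return set.intersection(*region_sets) if region_sets else set()


print(can_process("us_claims", "eu-west"))
print(valid_training_regions(["eu_customer_records", "public_product_catalog"]))
```

An empty result from `valid_training_regions` is a signal that the datasets cannot be combined in one place under the assumed rules.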

Physical infrastructure matters too. For companies deploying AI systems globally, logistics becomes surprisingly important. Getting specialized hardware to different locations requires careful planning. For enterprises managing international operations, efficient processes like online customs clearance for hardware shipments and equipment deployment become essential components of infrastructure strategy, especially when you’re racing against project deadlines and need AI hardware in multiple countries simultaneously.

Managing distributed teams adds another dimension. Your AI developers might be in one country, your data scientists in another, and your production systems in a third. Your infrastructure needs to support this complexity without creating bottlenecks.

Monitoring and Governance Systems

AI systems don’t just need deployment—they need ongoing monitoring and governance. Models can drift over time as data patterns change. Performance can degrade. Biases can emerge.

Implement comprehensive monitoring that tracks not just technical metrics like latency and throughput, but also business metrics like accuracy and user satisfaction. Set up alerts so you know immediately when something goes wrong.
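Drift in a single numeric feature can be tracked with the population stability index (PSI), which compares how a baseline sample and a current sample fall into the same bins. This is a minimal standard-library sketch. The equal-width binning and the small floor for empty bins are simplifying choices, and the sample data is synthetic.

```python
import math
from typing import Sequence


def _bin_shares(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Share of values in each bin defined by the interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for e in edges if v > e)  # number of edges below v
        counts[idx] += 1
    # A small floor avoids log(0) when a bin is empty.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population stability index using equal-width bins derived from the baseline."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        raise ValueError("baseline has no spread")
    width = (hi - lo) / bins
    edges = [lo + width * i for i in range(1, bins)]
    base_shares = _bin_shares(baseline, edges)
    curr_shares = _bin_shares(current, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_shares, curr_shares))


# Synthetic example: the current data is the baseline shifted upward.
baseline = [float(v) for v in range(100)]
shifted = [v + 50 for v in baseline]
print(f"no change: {psi(baseline, baseline):.3f}")
print(f"shifted:   {psi(baseline, shifted):.3f}")
```

A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, but treat any threshold as a starting point to tune against your own models.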

Establish responsible AI governance frameworks. How do you ensure your AI systems are fair and unbiased? How do you handle edge cases and errors? Who’s responsible when an AI system makes a wrong decision?

Create clear incident response protocols. When something goes wrong—and eventually it will—everyone should know exactly what to do. Who gets notified? How do you investigate? What’s the process for fixes and updates?

Regular audits aren’t optional. Review your AI systems periodically to ensure they’re still compliant, performing well, and meeting business objectives.

Conclusion

Building the right infrastructure for scaled AI isn’t glamorous work, but it’s absolutely essential. These decisions span five areas: data architecture, network infrastructure, compute resources, talent strategy, and operational readiness. Together they form the foundation that determines whether your AI initiatives succeed or struggle.

The companies that succeed with AI aren’t necessarily the ones with the fanciest algorithms or the biggest budgets. They’re the ones that took time to build solid infrastructure foundations before trying to scale.

Start by honestly assessing where you are today. Which of these five areas are strengths? Where are your weaknesses? Don’t try to fix everything at once. Prioritize based on your specific AI goals and constraints.

Remember, infrastructure decisions you make today will affect your AI capabilities for years to come. It’s worth taking the time to get them right.